Hello, and welcome to Aiden's Lab Notebook.

When multiple developers or teams share the same Kubernetes cluster, applying consistent rules, or policies, is crucial. Policies not only keep operations stable but also help minimize security vulnerabilities. The kinds of policies we're talking about include:

Enforcing specific security settings on all workloads,

Limiting resource usage,

And ensuring that required labels are always included.

If these cluster policies exist only as documentation and rely on developers and operators to follow them manually, mistakes (human error) are bound to happen. And having to review every Kubernetes resource manifest each time a resource is deployed isn't great for operational efficiency, right?

This is why the practice of defining cluster policies as code and having the system enforce them automatically has emerged. This concept is called Policy as Code (PaC). It automatically verifies that resources comply with the defined policies before they are deployed and blocks deployments that violate them, improving the cluster's security and stability.

Kyverno is an open-source policy engine built to implement PaC in Kubernetes clusters.

When Your Kubernetes Cluster Needs Policies, Try Kyverno

Kyverno is an open-source policy engine designed for managing and enforcing policies in a Kubernetes environment. You can think of it as a 'gatekeeper' that detects all resource creation and modification requests coming into the cluster and then allows, blocks, or modifies them based on defined policies.

Kyverno works through Kubernetes' admission control webhook mechanism. To put it more simply, when a user tries to create or modify a Kubernetes resource with a command like kubectl apply, the Kubernetes API server forwards that request to Kyverno for policy validation. Kyverno then reviews the request's content and tells the API server whether to allow or deny it (or, for mutate rules, how to modify it), thus enforcing the policies.
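To make this request/response exchange a bit more concrete, here is a minimal Python sketch of the kind of answer an admission webhook sends back to the API server. It assumes the admission.k8s.io/v1 AdmissionReview format from the Kubernetes API; the helper names build_admission_response and require_name_label are hypothetical illustrations, not Kyverno's actual code.

```python
from typing import Any, Callable, Dict, Optional

# A check returns None when the object is acceptable, or a violation message otherwise.
Check = Callable[[dict], Optional[str]]


def build_admission_response(review: dict, check: Check) -> dict:
    """Hypothetical helper: answer an AdmissionReview request with allow or deny."""
    request = review["request"]
    violation = check(request["object"])
    response: Dict[str, Any] = {
        "uid": request["uid"],  # the response must echo the request's uid
        "allowed": violation is None,
    }
    if violation is not None:
        # This message is what the user sees when kubectl apply is rejected
        response["status"] = {"message": violation}
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


def require_name_label(obj: dict) -> Optional[str]:
    """Hypothetical check mirroring the label requirement used later in this guide."""
    labels = obj.get("metadata", {}).get("labels") or {}
    if labels.get("test-for-kyverno/name"):
        return None
    return "The label test-for-kyverno/name is required."


review = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {"uid": "abc-123", "object": {"kind": "Pod", "metadata": {"name": "web"}}},
}
print(build_admission_response(review, require_name_label)["response"])
```

Kyverno evaluates its policies where this sketch calls a single check function, but the shape of the exchange is the same: one request in, one allow/deny answer (with a message on denial) out.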

So, why should we use Kyverno for managing policies in our Kubernetes cluster? Kyverno has some distinct advantages:

Define policies using Kubernetes CRDs like ClusterPolicy or Policy

Policies that apply to the entire cluster can be defined with ClusterPolicy, while policies that apply at the Namespace level can be defined with Policy. You can create, view, modify, and delete policies using kubectl, just like you would with regular Kubernetes resources. It's also easy to manage policy versions using a GitOps workflow.

Check policy results through Kubernetes Events or PolicyReport

Users can easily check the results of policies with familiar commands like kubectl get events or kubectl describe. This makes policy debugging and cluster state auditing more convenient.

Provides resource modification or creation capabilities in addition to blocking resource requests

Besides the ability to block (Validate) requests that violate policies, it also offers a feature to automatically add specific content to resources (Mutate) and a feature to automatically create other related resources when certain conditions are met (Generate).

This helps automate cluster operations and improve developer convenience.
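To picture the ClusterPolicy versus Policy scoping from the first advantage above, here is a tiny Python model: a ClusterPolicy is considered for resources in any Namespace (and for cluster-scoped resources such as Namespaces themselves), while a namespaced Policy only covers resources in its own Namespace. The function policy_covers and the sample dictionaries are hypothetical illustrations, not part of Kyverno, and real matching also depends on each rule's match conditions.

```python
from typing import Optional


def policy_covers(policy: dict, resource: dict) -> bool:
    """Toy scoping rule: is this resource within the policy's reach at all?"""
    resource_ns: Optional[str] = resource["metadata"].get("namespace")
    if policy["kind"] == "ClusterPolicy":
        return True  # cluster-wide: any Namespace, plus cluster-scoped resources
    if policy["kind"] == "Policy":
        # namespaced: only resources living in the policy's own Namespace
        return resource_ns == policy["metadata"]["namespace"]
    raise ValueError(f"unexpected policy kind: {policy['kind']}")


cluster_policy = {"kind": "ClusterPolicy", "metadata": {"name": "require-label"}}
team_policy = {"kind": "Policy", "metadata": {"name": "team-rules", "namespace": "team-a"}}

pod_a = {"kind": "Pod", "metadata": {"name": "web", "namespace": "team-a"}}
pod_b = {"kind": "Pod", "metadata": {"name": "api", "namespace": "team-b"}}

for pod in (pod_a, pod_b):
    print(pod["metadata"]["namespace"], policy_covers(cluster_policy, pod), policy_covers(team_policy, pod))
```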

Kyverno offers a variety of features, and in this hands-on guide, we'll be trying out these three core ones:

Validate

Kyverno's most basic rule type. It checks whether resources being created or modified in the cluster meet defined conditions.

Mutate

Automatically modifies resources, such as adding default values when a specific field is missing in a Kubernetes resource manifest, or applying common labels or annotations to all resources.

Generate

Reacts to the event of a specific resource being created (the trigger) and automatically creates other related resources.

Example: Automatically creating a NetworkPolicy or Role whenever a new Namespace is created.

This hands-on guide is designed to clearly convey these three core features of Kyverno to anyone who is curious about, or considering adopting, Kubernetes policy management or Kyverno.

Furthermore, the core Kyverno features covered in this guide are also key topics for the CNCF's Kyverno Certified Associate (KCA) certification. If you're interested in the KCA, working through this guide will help you build the knowledge you need for the exam.

Preparation: Installing Kyverno on a minikube Cluster

Installing the Kyverno CLI

You might be wondering, if you can create and modify policies with kubectl, why do we need to install the Kyverno CLI?

It's a useful tool because it allows you to test and validate Kyverno policies before applying them directly to the cluster. You can use a policy YAML file and a test resource YAML file to check in advance if the policy works as intended.

You can install the Kyverno CLI directly on your local machine, but it can also be installed as a kubectl plugin via Krew.

Assuming you already have Krew installed, run the following Krew command to install the Kyverno CLI as a kubectl plugin.

kubectl krew install kyverno

You can then verify that the Kyverno CLI is installed correctly with the following command:

kubectl kyverno version

Installing the Kyverno CLI with Krew makes it simple to use, right?

Now it's time to deploy the necessary resources for Kyverno to run on our Kubernetes cluster. We'll be using minikube again for a quick cluster setup.

Don't worry if you're not yet familiar with installing minikube and configuring a Kubernetes cluster. You can quickly catch up by following Aiden's Lab's minikube hands-on guide, which covers everything from an introduction to installation and usage.

Let's move on to the next step.

Deploying Kyverno with Helm on a minikube Cluster

We'll use Helm to deploy Kyverno to our cluster. As some of you may know, Helm is a package manager for Kubernetes: it bundles a project's resource YAML files into versioned packages called charts, which you can fetch from a remote repository, install, upgrade, and share, all from the CLI, without keeping the YAML files locally.

Helm makes deploying Kyverno straightforward, and it's also the installation method recommended in Kyverno's official documentation.

If Helm is not installed in your lab environment, install it first as follows (based on an Ubuntu environment):

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

Then, run the following Helm commands to deploy Kyverno to your minikube cluster.

helm repo add kyverno https://kyverno.github.io/kyverno/

helm repo update

helm install kyverno kyverno/kyverno -n kyverno --create-namespace

Once the deployment is complete, you need to check if Kyverno's components are running correctly. You can do this by checking the current status of all Kyverno-related Pods with the kubectl -n kyverno get pods command.

As you can see below, all Kyverno-related Pods have all of their containers READY and a STATUS of Running, which means all of Kyverno's components are working properly.
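If you'd rather check this from a script than by eye, the same condition can be evaluated on the JSON that kubectl -n kyverno get pods -o json prints, using the standard Pod status fields phase and containerStatuses[].ready. The Python sketch below is one way to do it under a few assumptions: the function name kyverno_pods_healthy and the file name check_pods.py are hypothetical, and it skips Pods that have already completed (phase Succeeded), in case your installation runs periodic Jobs whose finished Pods remain visible.

```python
import json
import sys


def kyverno_pods_healthy(pod_list: dict) -> bool:
    """True if at least one Pod runs and every non-completed Pod is Running with all containers ready."""
    running = 0
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        phase = status.get("phase")
        if phase == "Succeeded":
            continue  # finished Job Pods are not part of the running components
        if phase != "Running":
            return False
        containers = status.get("containerStatuses", [])
        if not containers or not all(c.get("ready") for c in containers):
            return False
        running += 1
    return running > 0  # an empty list means nothing is deployed yet


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl -n kyverno get pods -o json > pods.json && python3 check_pods.py pods.json
    with open(sys.argv[1]) as f:
        print("healthy" if kyverno_pods_healthy(json.load(f)) else "not ready")
```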

With both the Kyverno CLI installation and Kyverno deployment complete, let's start our hands-on guide with Kyverno's core features.

Core Feature 1 - Validating Resource Creation Rules (Validate)

The validate rule maintains the consistency of the Kubernetes cluster and reduces potential security risks by blocking resource requests that violate defined policies.

For example, you can create and enforce policies for security best practices like 'all Pods must run as a non-root user' or 'all Ingresses must only allow HTTPS'. You can also enforce internal organizational standards, such as 'all resources must have an owner label'.

Let's create a Kyverno ClusterPolicy that includes a validate rule. Let's assume we have the following requirement:

All Pods created in the cluster must have the test-for-kyverno/name label.

Violating this policy will block resource creation.

An example ClusterPolicy (require-label.yaml) to meet this requirement can be written as follows:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-label
spec:
  validationFailureAction: Enforce # Block resource creation if policy is violated
  rules:
    - name: check-for-name-label
      match:
        resources: # Specify the resource kinds the policy applies to
          kinds:
            - Pod
      validate:
        message: "The label test-for-kyverno/name is required."
        pattern:
          metadata:
            labels:
              test-for-kyverno/name: "?*" # "?*" matches any non-empty value, so the label must exist

The following two Deployment YAML examples are test manifests that fail and pass this policy, respectively.

Deployment that violates the policy (test-validate-fail.yaml):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment-fail
  namespace: kyverno-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-test-app
  template:
    metadata:
      # Creation will be denied by the validate policy because the 'test-for-kyverno/name' label is missing
      labels:
        app: my-test-app
    spec:
      containers:
        - name: nginx
          image: nginx:latest

Deployment that follows the policy (test-validate-success.yaml):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment-success
  namespace: kyverno-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      test-for-kyverno/name: my-successful-app
  template:
    metadata:
      # Passes the validate policy because the 'test-for-kyverno/name' label is present
      labels:
        test-for-kyverno/name: my-successful-app
    spec:
      containers:
        - name: nginx
          image: nginx:latest

As you can see from the test Deployment YAML code, we plan to deploy our test resources to the kyverno-demo Namespace, so please create it first by running kubectl create ns kyverno-demo.
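Before testing with the CLI, it may help to see why these two manifests should behave differently. The pattern value "?*" uses Kyverno's wildcards, where ? stands for a single character and * for any number of characters (including none), so together they accept any non-empty value. And although the policy matches Pod, the test manifests are Deployments: Kyverno's auto-gen feature derives equivalent rules for Pod controllers such as Deployments that check the Pod template. The Python sketch below roughly mimics that check; wildcard_to_regex, matches, and check_pod_template are hypothetical helpers, and the real engine supports many more pattern features.

```python
import re


def wildcard_to_regex(pattern: str) -> str:
    """Translate a '?'/'*' wildcard pattern into a regex string."""
    parts = []
    for ch in pattern:
        if ch == "?":
            parts.append(".")  # exactly one character
        elif ch == "*":
            parts.append(".*")  # zero or more characters
        else:
            parts.append(re.escape(ch))  # everything else is literal
    return "".join(parts)


def matches(pattern: str, value: str) -> bool:
    """The whole value must match the wildcard pattern."""
    return re.fullmatch(wildcard_to_regex(pattern), value, flags=re.DOTALL) is not None


def check_pod_template(deployment: dict, key: str, pattern: str) -> str:
    """Return 'pass' or 'fail' for a label pattern on a Deployment's Pod template."""
    labels = deployment["spec"]["template"]["metadata"].get("labels", {})
    value = labels.get(key)
    return "pass" if value is not None and matches(pattern, value) else "fail"


fail_deploy = {"metadata": {"name": "test-deployment-fail"},
               "spec": {"template": {"metadata": {"labels": {"app": "my-test-app"}}}}}
ok_deploy = {"metadata": {"name": "test-deployment-success"},
             "spec": {"template": {"metadata": {"labels": {"test-for-kyverno/name": "my-successful-app"}}}}}

for d in (fail_deploy, ok_deploy):
    print(d["metadata"]["name"], check_pod_template(d, "test-for-kyverno/name", "?*"))
```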

For this lab, I created a working folder with two subfolders: policies for the policy files and resources for the test resource files. Before actually deploying the policy, let's check how it applies using the Kyverno CLI command below.

kubectl kyverno apply {Policy_File_Name} -r {Test_Resource_Path}

If you add the -t option to the end of the command above, the policy test results are displayed in an easy-to-read table format, as shown below.

Just as we intended, the test-validate-fail.yaml resource results in a policy violation, while the test-validate-success.yaml resource passes the policy. Now, let's actually deploy the policy to the cluster and then deploy the test resources.

Deploy the require-label.yaml policy with the following command:

⚠️ Caution when applying a ClusterPolicy!

The ClusterPolicy we're about to deploy forces every Pod deployed in the cluster to include a specific label, so be sure to do this on a lab cluster.

If other workloads are already running in your cluster, they may be affected too: new Pods they create without the label (for example, when a Deployment replaces a Pod) can be rejected by the ClusterPolicy.

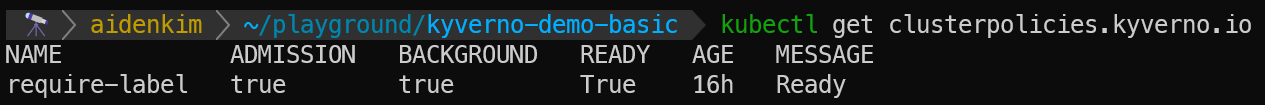

kubectl apply -f policies/require-label.yaml

You can view the deployed Kyverno ClusterPolicy with kubectl get clusterpolicy, as follows:

Next, let's try to deploy the test-validate-fail.yaml resource, which failed the Kyverno CLI test earlier, using the kubectl apply command.

As you can see, the resource deployment was blocked. It also displays the informational message we specified in the message field of our validate rule! This shows that Kyverno's validate rule informs the user about policy violations before resource creation and guides them to comply with the policy.

Core Feature 2 - Automatic Resource Modification (Mutate)

The next rule we'll look at is mutate. When a developer or operator creates a resource in a Kubernetes cluster and omits a required field, the mutate rule can automatically set a default value.

For example, if a Pod's imagePullPolicy field is not specified, a mutate rule can automatically insert the value IfNotPresent, or it can add default resources.requests values to every Pod. Rules like these help improve the stability and resource efficiency of the cluster.

Let's get hands-on with the mutate rule. Let's assume we have the following requirement:

Whenever a new Pod is created in the cluster, it must have an annotation called managed-by.

The default value for the managed-by annotation is kyverno.

A ClusterPolicy (add-annotation.yaml) that meets the above requirement can be written as follows:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-annotation-simple
spec:
  rules:
    - name: add-managed-by-annotation
      match:
        resources:
          kinds:
            - Pod
      mutate:
        patchStrategicMerge: # Merge the resource content below into the created resource content
          metadata:
            annotations:
              # If the 'managed-by' annotation is missing (+), add the specified value (kyverno)
              +(managed-by): kyverno

We'll use the same test Deployment YAML files from 'Core Feature 1' for this exercise. First, let's use the Kyverno CLI's apply command to test whether the mutate rule defined in add-annotation.yaml works correctly. We already went over the kyverno apply command format in 'Core Feature 1', right?

When you run the command, you can see that the mutate rule automatically adds the managed-by annotation with the value kyverno to both Deployments. Note that kyverno apply only prints the mutated result to the terminal; the Deployment YAML files on disk are not modified.
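The +(managed-by) key is Kyverno's add-if-not-present anchor: the value is written only when the key is missing, and an existing value is left untouched. The Python sketch below imitates that behavior on plain dictionaries; merge_add_if_absent is a hypothetical helper, and Kyverno's real patchStrategicMerge handling also covers lists, other anchor types, and more edge cases.

```python
import copy
import re

ANCHOR = re.compile(r"^\+\((?P<key>.+)\)$")  # matches keys written as +(name)


def merge_add_if_absent(resource: dict, patch: dict) -> dict:
    """Return a copy of resource with patch merged in, honoring +(key) anchors on scalar values."""
    result = copy.deepcopy(resource)
    for raw_key, value in patch.items():
        m = ANCHOR.match(raw_key)
        key = m.group("key") if m else raw_key
        if isinstance(value, dict):
            # Nested mapping: merge recursively (anchors on mappings are not modeled here)
            existing = result.get(key)
            base = existing if isinstance(existing, dict) else {}
            result[key] = merge_add_if_absent(base, value)
        elif m is None or key not in result:
            result[key] = value  # plain key: overwrite; anchored key: add only if missing
    return result


patch = {"metadata": {"annotations": {"+(managed-by)": "kyverno"}}}

bare = {"metadata": {"name": "web"}}
owned = {"metadata": {"name": "db", "annotations": {"managed-by": "team-a"}}}

print(merge_add_if_absent(bare, patch)["metadata"]["annotations"])
print(merge_add_if_absent(owned, patch)["metadata"]["annotations"])
```

The first call adds managed-by: kyverno, while the second keeps the existing team-a value, which is exactly the difference between the +() anchor and a plain key.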

Now, let's deploy this ClusterPolicy to our lab cluster and then deploy the Deployment from the test-validate-success.yaml file. (Since the require-label ClusterPolicy is already deployed, the Deployment in test-validate-fail.yaml will still be rejected unless we add the test-for-kyverno/name label to its Pod template.)

First, deploy the policy from the add-annotation.yaml file.

Then, deploy the Deployment from the test-validate-success.yaml file and check the deployed Deployment's specification with the kubectl describe command. Remember that the managed-by annotation is not specified in the current example YAML file!

The Deployment from test-validate-success.yaml was deployed successfully, and the kyverno value for the managed-by annotation was automatically added! This confirms that the mutate rule can ensure the consistency of resource specifications by automatically adding default values for fields that must be specified in Kubernetes resources.

Core Feature 3 - Automatic Generation of Required Resources (Generate)

Finally, let's look at Kyverno's third core feature, the generate rule. Whenever a specific resource is created, this rule automatically creates other resources that are necessary but would be tedious to create manually each time.

For example, whenever a new Namespace is created for a user or team, it can automatically create a default Role and RoleBinding in that Namespace. It's a useful rule for automating complex configuration processes while maintaining consistency.

Let's assume we have the following requirement:

Whenever a new Namespace is created, automatically create a NetworkPolicy for it.

This NetworkPolicy will, by default, block all outgoing traffic from all Pods belonging to that Namespace.

A ClusterPolicy (generate-network-policy.yaml) that meets this requirement can be written as follows:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: generate-network-policy
spec:
  rules:
    - name: generate-default-deny-policy
      # Define that this rule is triggered by the creation of a Namespace
      match:
        resources:
          kinds:
            - Namespace
      # When the above match condition is met, create the resource below
      generate:
        apiVersion: networking.k8s.io/v1
        kind: NetworkPolicy
        name: default-deny-all # Name of the NetworkPolicy to be created
        namespace: "{{request.object.metadata.name}}" # Use the name of the triggering Namespace
        synchronize: true # Keep the generated NetworkPolicy in sync with this rule (Kyverno recreates it if it is deleted)
        data:
          spec:
            # Apply this policy to all Pods in the Namespace
            podSelector: {}
            # Block all outgoing (Egress) traffic
            policyTypes:
              - Egress

Let's deploy this ClusterPolicy directly to the cluster.

And now, let's create a Namespace named test-for-generate-rule.

If we check the NetworkPolicy of that Namespace...

The NetworkPolicy named default-deny-all, which we defined in the generate-network-policy.yaml ClusterPolicy, was created automatically! The values of its generate.kyverno.io/policy-name and generate.kyverno.io/rule-name labels also show which Kyverno policy and rule created it.

Beyond this example, other resources such as ConfigMaps or Secrets, which would otherwise have to be created over and over by hand, can also be generated automatically with Kyverno's generate rule.
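Conceptually, the generate rule takes the triggering Namespace, resolves the {{request.object.metadata.name}} variable against the admission request, and builds the NetworkPolicy from the data block. Here is a minimal Python sketch of those steps. The helpers resolve and generate_network_policy are hypothetical; the variable lookup is a simplified dotted-path stand-in for Kyverno's JMESPath-based variables, and the two generate.kyverno.io labels mirror the ones observed on the generated NetworkPolicy (the real resource may carry additional labels).

```python
import re

VAR = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")  # matches {{ a.b.c }}


def resolve(template: str, context: dict) -> str:
    """Replace {{dotted.path}} variables with values looked up in context."""
    def lookup(match: re.Match) -> str:
        value = context
        for part in match.group(1).split("."):
            value = value[part]  # walk one level deeper per path segment
        return str(value)
    return VAR.sub(lookup, template)


def generate_network_policy(request: dict) -> dict:
    """Build the default-deny NetworkPolicy for a newly created Namespace."""
    namespace = resolve("{{request.object.metadata.name}}", {"request": request})
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": "default-deny-all",
            "namespace": namespace,
            "labels": {
                "generate.kyverno.io/policy-name": "generate-network-policy",
                "generate.kyverno.io/rule-name": "generate-default-deny-policy",
            },
        },
        # Same spec as the rule's data block: select all Pods, deny all egress
        "spec": {"podSelector": {}, "policyTypes": ["Egress"]},
    }


request = {"operation": "CREATE",
           "object": {"kind": "Namespace", "metadata": {"name": "test-for-generate-rule"}}}
policy = generate_network_policy(request)
print(policy["metadata"]["namespace"], policy["spec"]["policyTypes"])
```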

Wrapping Up

Since Kyverno's ClusterPolicy is a resource that affects the entire cluster, I recommend removing all the policies you deployed to your minikube cluster. You can remove them all at once with the familiar kubectl delete command.

kubectl delete clusterpolicy require-label add-annotation-simple generate-network-policy

We have now taken a detailed look at and completed a hands-on guide for Kyverno's three core features and rules. The validate, mutate, and generate rules are powerful on their own, but they can be combined to create even more sophisticated and effective policies.

It's possible to implement complex scenarios where, when a specific resource is created, mutate adds default values, validate checks other required conditions, and then generate creates related auxiliary resources along with it. Since this article was written for those just starting with Kyverno, we didn't get into such advanced scenarios, but I hope to have the opportunity to cover them in the future.
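The combined flow described above follows the order in which Kubernetes runs admission: mutating webhooks before validating ones, with generation acting only on requests that were admitted. The Python sketch below chains three toy rules in that order for a new Namespace. Every function in it, and the owner label it checks, is a hypothetical stand-in; it also ignores details such as recent Kyverno versions handling generation asynchronously in a background controller.

```python
from typing import Callable, List, Optional

Mutator = Callable[[dict], dict]
Validator = Callable[[dict], Optional[str]]
Generator = Callable[[dict], list]


def admit(obj: dict, mutators: List[Mutator], validators: List[Validator],
          generators: List[Generator]) -> dict:
    """Run mutate -> validate -> generate on one incoming object (toy model)."""
    for mutate in mutators:  # 1. mutating admission: apply defaults first
        obj = mutate(obj)
    for validate in validators:  # 2. validating admission: check the mutated object
        problem = validate(obj)
        if problem:
            return {"allowed": False, "message": problem, "generated": []}
    generated = []  # 3. generation: only for admitted objects
    for generate in generators:
        generated.extend(generate(obj))
    return {"allowed": True, "object": obj, "generated": generated}


def add_managed_by(ns: dict) -> dict:
    # Existing annotations win, so managed-by is only added when absent
    annotations = {"managed-by": "kyverno", **ns["metadata"].get("annotations", {})}
    return {**ns, "metadata": {**ns["metadata"], "annotations": annotations}}


def require_owner(ns: dict) -> Optional[str]:
    return None if ns["metadata"].get("labels", {}).get("owner") else "The label owner is required."


def default_deny(ns: dict) -> list:
    return [{"kind": "NetworkPolicy",
             "metadata": {"name": "default-deny-all", "namespace": ns["metadata"]["name"]}}]


ns = {"kind": "Namespace", "metadata": {"name": "team-a", "labels": {"owner": "alice"}}}
result = admit(ns, [add_managed_by], [require_owner], [default_deny])
print(result["allowed"], result["object"]["metadata"]["annotations"], len(result["generated"]))
```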

By following this hands-on guide, I believe you have now acquired a basic knowledge of Kyverno and Policy as Code. If you have any further questions or topics you'd like me to cover on this subject, please feel free to leave them in the feedback form below.

I'll be back with another interesting topic next time. Thank you!

✨Enjoyed this issue?

How about this newsletter? I’d love to hear your thoughts about this issue in the form below!

👉 Feedback Form

Your input will help improve Aiden’s Lab Notebook and make it more useful for you.

Thanks again for reading this notebook from Aiden’s Lab :)